Xiaotian: Designing AI as a Mental Health Infrastructure, Not a Substitute for Care

Project Snapshot

A human-AI hybrid mental health support system designed to navigate the fundamental tension between scalability and safety. This project operationalized “safety-by-design” in a real-world deployment, transforming a critical incident into a systematic framework for responsible escalation and boundary-setting.

I see Xiaotian as a human-AI hybrid support system in which safety is operationalized as workflow: clear boundaries, accountable escalation, and post-incident learning loops. The aim is to scale access without becoming a clinical substitute.

Core Challenge: How do we design an AI system that provides accessible, 24/7 support while rigorously avoiding the overreach of a clinical substitute, and ensuring seamless, accountable handoff to human care in crisis situations?

Users & context: High-stakes, privacy-sensitive mental health settings where access to human support is limited and safety must be operationalized.

System: AI reflective support + triage + human handoff

Guardrails: disclosure, scope boundary, crisis routing

Human partners: campus counseling / designated responders

Data: anonymized session logs + opt-in research feedback

Outcome: Real-world deployment at Westlake University, serving 10,000+ graduate students on campus.

My Role & Scope

Role:

I served as the primary translator between vulnerable user needs and responsible system design. My role was to embody the user’s voice in the product process, ensuring that every interaction—from the first disclosure statement to the crisis escalation protocol—was grounded in empathy and operational rigor.

I owned:

User research end-to-end, with emphasis on surfacing “unsaid needs” (privacy anxiety, hesitation points, trust barriers)

Translating insights into product language: entry points, disclosure, boundary-setting, escalation workflows, and human-in-the-loop handoffs

Post-incident review and safeguards refinement (operationalizing safety-by-design)

Deliverables: research synthesis, user journey & entry flows, disclosure/boundary copy, escalation protocol spec, post-incident review notes, disclosure/ethics playbook

Not my scope: clinical diagnosis or therapy decisions (handled by qualified professionals)

Create — Idea & Hypothesis

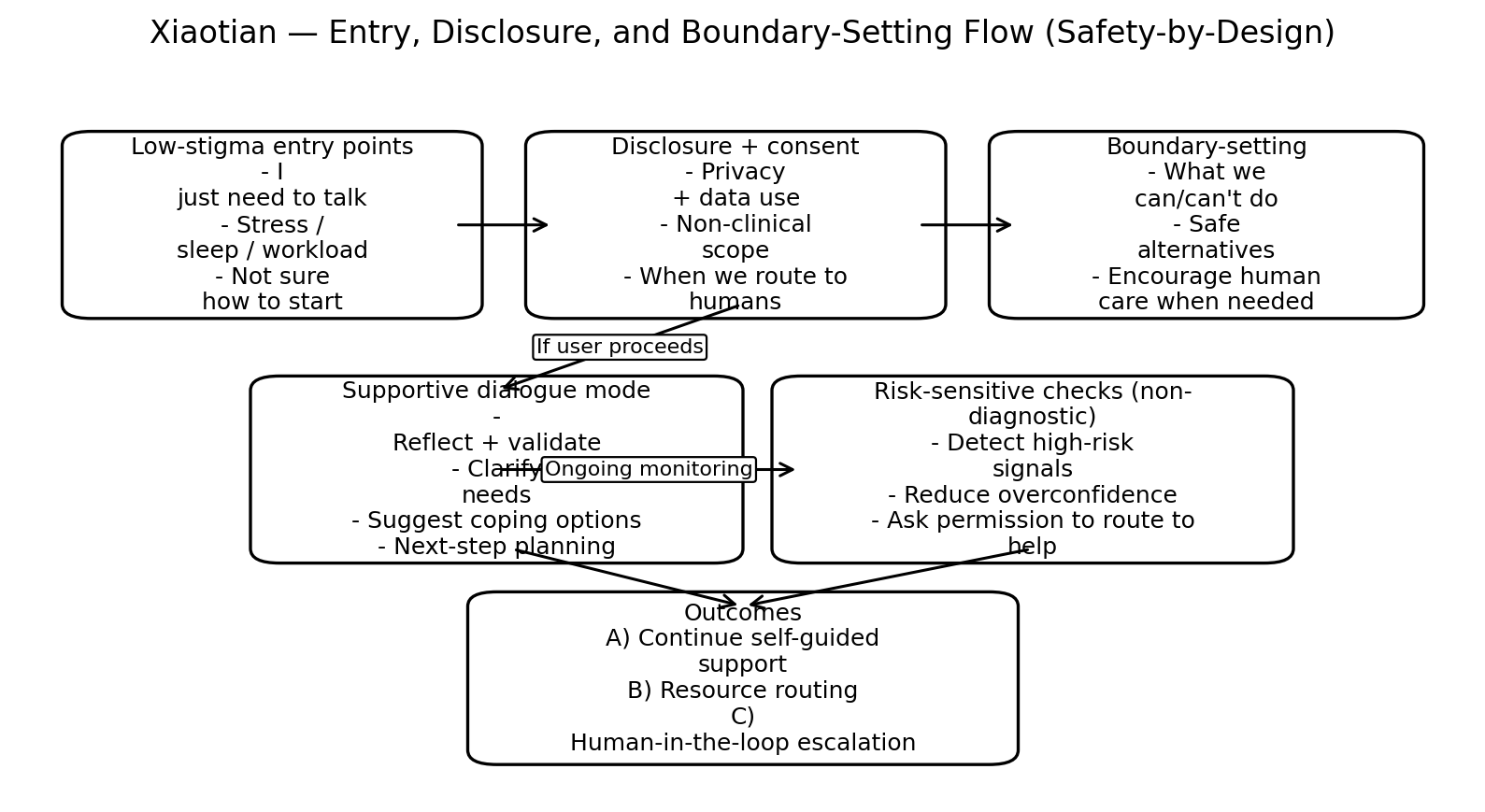

Mental health support is a structural access problem, not simply a “tool problem.” I framed Xiaotian as an infrastructure layer: a supportive companion that can listen, guide reflection, and connect users to human care when needed—within clearly defined boundaries.

Hypothesis: A human-AI hybrid workflow can extend timely support while remaining responsible if escalation paths and human oversight are designed into the system from the start.

Design — Research & Evidence

I led user research with a focus on latent concerns that users rarely state explicitly: fear of judgment, privacy anxiety, and hesitation to begin a vulnerable conversation. These insights shaped the system’s tone, entry points, and disclosure language. We also reviewed early interaction patterns at scale, using a large set of session logs, to identify both effective supportive dialogue patterns and unsafe failure modes that required intervention.

I employed a mixed-methods approach to map the landscape of risk and trust:

1. Qualitative Deep-Dives: Conducted sensitive, semi-structured interviews and diary studies to uncover latent fears (e.g., “Will this be used against me?”).

2. Quantitative Log Analysis: Systematically reviewed 150k+ session logs to identify “failure signatures”: conversation patterns that preceded disengagement or distress, or that triggered escalation flags.

3. Synthesis: Translated these findings into design heuristics (e.g., “Anxiety peaks within the first 3 exchanges”) and risk taxonomies that directly informed our safety protocols.
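As a rough illustration of the log-analysis step, the sketch below computes how often sessions end in disengagement within the first few exchanges. The record fields (`exchanges`, `outcome`), the outcome labels, and the sample data are hypothetical and hand-written for this example; they are not the project's actual log schema or results.

```python
from collections import Counter

# Hypothetical, hand-written records for illustration only.
# The real logs were anonymized and their schema is not shown here.
SESSIONS = [
    {"id": "s1", "exchanges": 2, "outcome": "disengaged"},
    {"id": "s2", "exchanges": 3, "outcome": "disengaged"},
    {"id": "s3", "exchanges": 12, "outcome": "completed"},
    {"id": "s4", "exchanges": 7, "outcome": "escalated"},
    {"id": "s5", "exchanges": 1, "outcome": "disengaged"},
    {"id": "s6", "exchanges": 9, "outcome": "completed"},
]


def outcome_counts(sessions):
    """Count how many sessions ended with each outcome label."""
    return Counter(s["outcome"] for s in sessions)


def early_exit_rate(sessions, max_exchanges=3):
    """Share of all sessions that disengaged within the first `max_exchanges` exchanges."""
    if not sessions:
        return 0.0
    early = sum(
        1
        for s in sessions
        if s["outcome"] == "disengaged" and s["exchanges"] <= max_exchanges
    )
    return early / len(sessions)


if __name__ == "__main__":
    print(outcome_counts(SESSIONS))
    print(early_exit_rate(SESSIONS))
```

A summary like this only points to where a pattern might be. Deciding whether it counts as a failure signature still depends on reading the conversations alongside the interview findings.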

Design — Insights → Decisions

Key decisions included:

Boundary-setting and disclosure: clearly communicate what the system can and cannot do

Safer entry points: design low-stigma ways to start a conversation

Human-in-the-loop escalation: define when and how conversations are handed off to human support, with clear accountability

Operational safeguards: ensure protocols and handoffs are workable outside ideal conditions

Iterate — Safety-by-Design as a Process

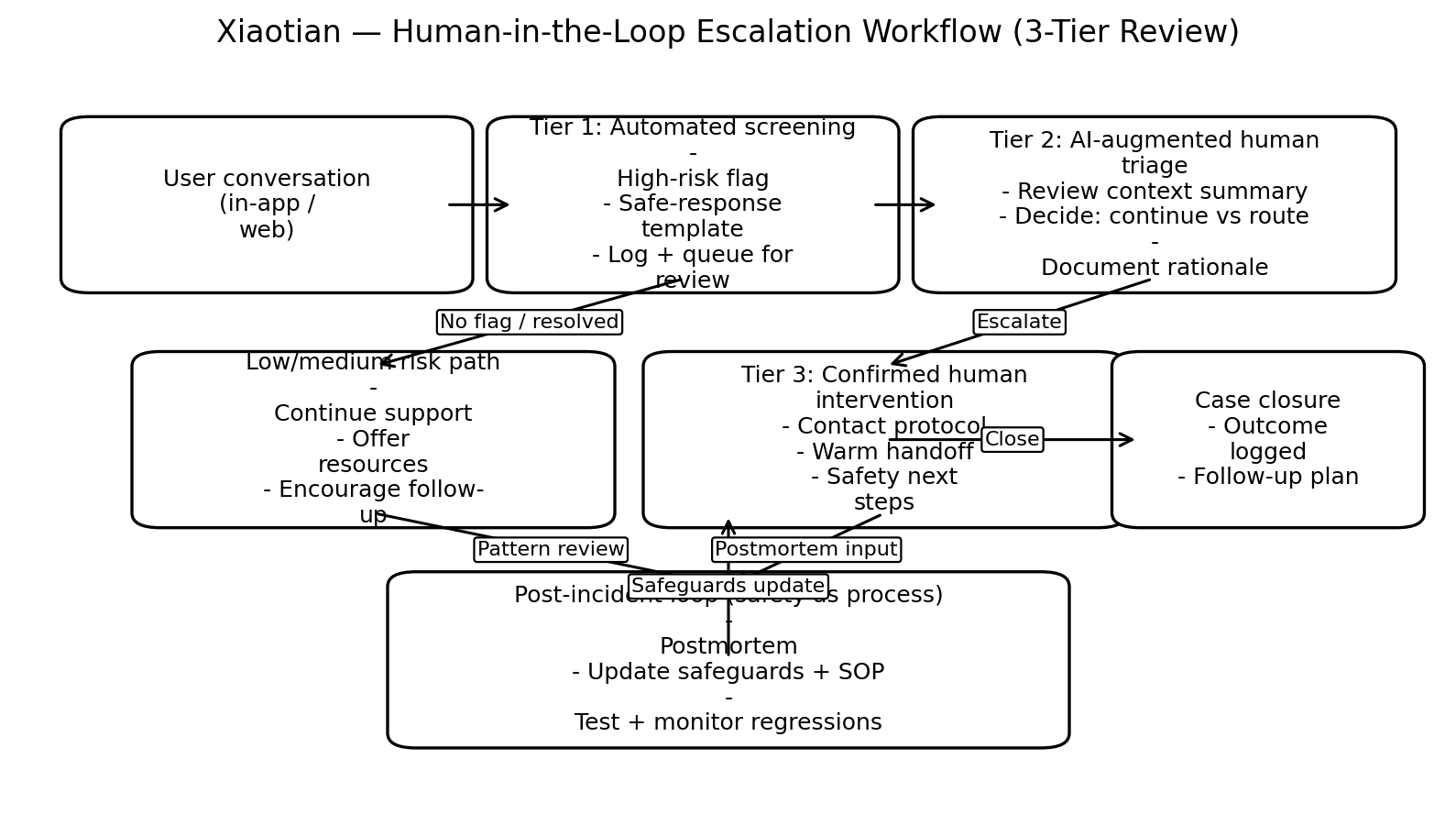

A critical moment occurred when the system surfaced signals that could indicate acute self-harm risk. We treated this not as an isolated event but as a systems improvement opportunity: we conducted a post-incident review, clarified handoffs and accountability, and strengthened human-in-the-loop safeguards so that similar signals would be handled responsibly in practice.

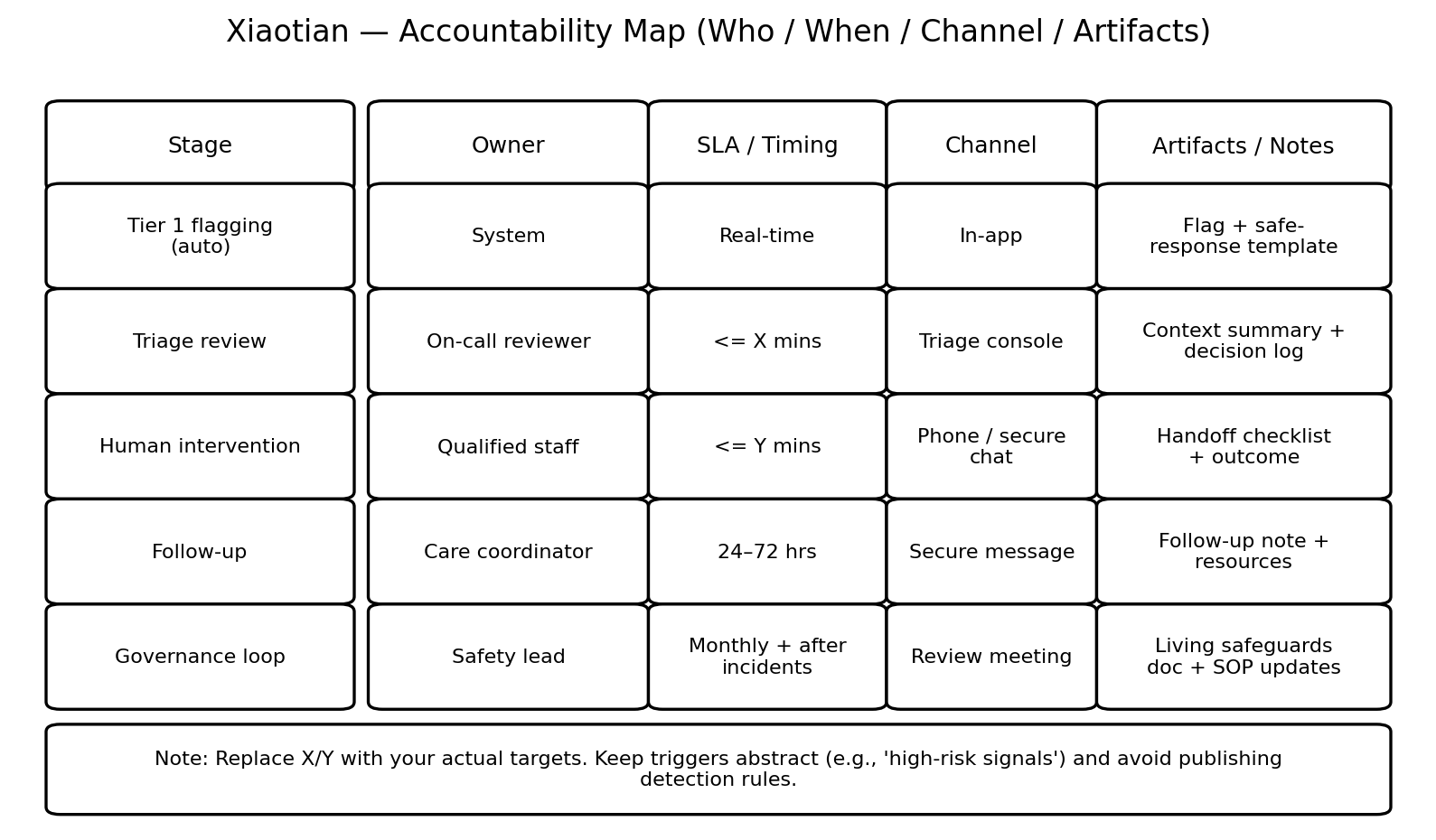

We instituted a three-tiered review system for high-risk flags: 1) automated filter, 2) AI-augmented human triage, 3) confirmed human intervention with clear SOPs.

We created a “Living Safeguards Document” that is updated after every major incident or monthly review, making safety an iterative product feature.

We defined clear accountability maps for handoffs, specifying who does what, within what timeframe, and with what communication tools.
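The three-tiered review and the accountability map can be sketched together as a small routing function. This is a minimal sketch under stated assumptions, not the deployed implementation: the tier names follow the description above, while the owners, response windows, and channels are hypothetical placeholders.

```python
from dataclasses import dataclass
from enum import Enum
from typing import Optional, Tuple


class Tier(Enum):
    AUTOMATED_FILTER = 1     # tier 1: automated filter raises a flag
    HUMAN_TRIAGE = 2         # tier 2: AI-augmented human triage
    HUMAN_INTERVENTION = 3   # tier 3: confirmed human intervention


@dataclass(frozen=True)
class Handoff:
    owner: str                   # who acts
    respond_within_minutes: int  # within what timeframe
    channel: str                 # with what communication tool


# Hypothetical values for illustration; real owners, timeframes,
# and tools would come from the team's SOPs.
ACCOUNTABILITY_MAP = {
    Tier.HUMAN_TRIAGE: Handoff("triage reviewer", 60, "review queue"),
    Tier.HUMAN_INTERVENTION: Handoff("designated responder", 15, "on-call phone"),
}


def next_step(
    filter_flagged: bool, reviewer_confirmed: Optional[bool] = None
) -> Optional[Tuple[Tier, Handoff]]:
    """Return the tier that must act next and its handoff, or None if no handoff is needed.

    reviewer_confirmed is None until a human reviewer has looked at the flag.
    """
    if not filter_flagged:
        return None  # no flag: conversation stays in ordinary AI support
    if reviewer_confirmed is None:
        tier = Tier.HUMAN_TRIAGE  # flagged but not yet reviewed
    elif reviewer_confirmed:
        tier = Tier.HUMAN_INTERVENTION  # a human confirmed the risk
    else:
        return None  # reviewer cleared the flag
    return tier, ACCOUNTABILITY_MAP[tier]


if __name__ == "__main__":
    print(next_step(True))
    print(next_step(True, reviewer_confirmed=True))
```

Keeping the map as data rather than hard-coding it into the routing logic means it can be revised after a review without touching the code path that decides escalation.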

Impact

We were able to deploy Xiaotian responsibly at Westlake University, where it has served more than 10,000 graduate students on campus.

Reflection & Design Principle Derived

This project taught me that in high-stakes HCI, “safety” is not a feature but a continuous process woven into the design lifecycle. The most critical design work often happens after launch, in response to real-world failures. It sparked my interest in studying formal evaluative methods for AI ethics and human-AI collaboration frameworks, so that I can move from crafting one responsible system to contributing to the field’s best practices.